This post is dedicated to The Economist's data crunchers and visualizers, who toil week after week to produce high-quality material. I have taken the liberty of using some of their content to show how dedicated they are to their craft. Another organization worth mentioning is The Guardian newspaper, which has an excellent data team.

Context

In the week of July 23rd, we will have a Big Data conference (5th Elephant) right in our backyard, courtesy of the HasGeek team. Hopefully attendees will make the most of the packed two-day agenda; the early-bird discount is on right now. The focus is on tools, processes and best practices, and there will be a special section on visualization where D3, Excel, Python and process will be discussed. There is, of course, a much-awaited session from Anand.

For me, it is not the infrastructure but the questions we ask of the data that matter. Once on that path, visualization is a great help in unfolding meanings, correlations and insights. Some tools are better suited than others to convey the gist.

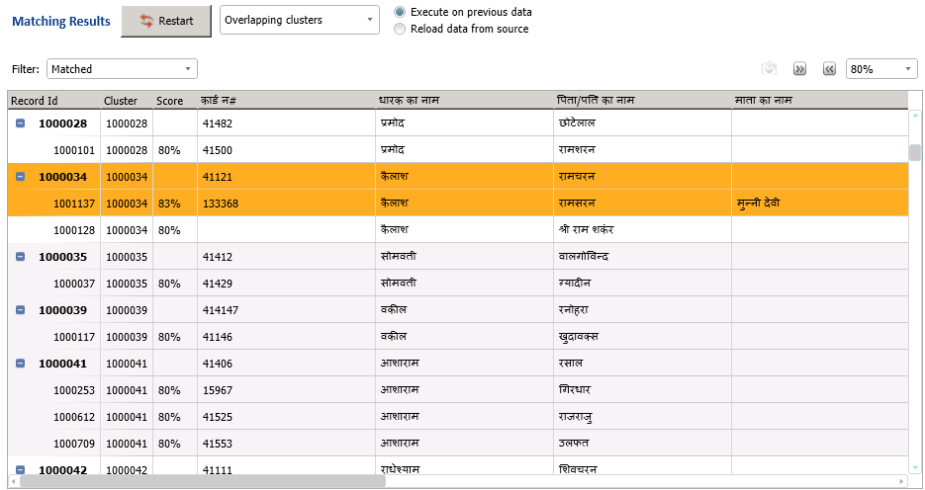

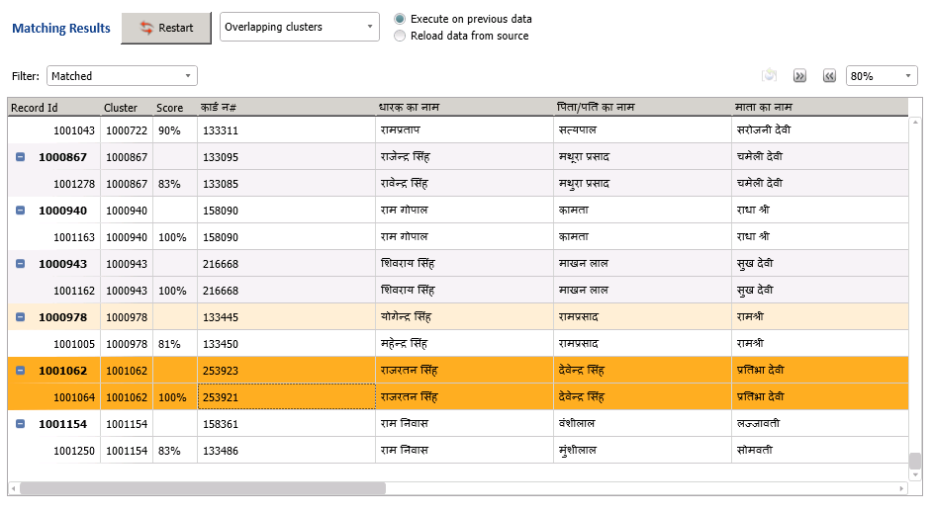

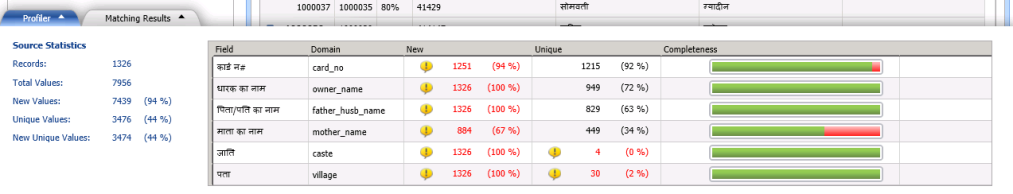

Data visualization is intricately linked to the interpretation of an issue. Below are examples from The Economist where the data shines and brings the issue into the open. One of them is an aberration, as it takes longer to grasp the gist, but in general most of them get the point across.

The easy ones

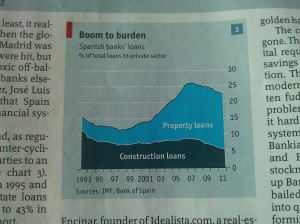

a. Hell Holes is as simple as they come: a clear title and description at the top and the source at the bottom. A simple bar chart plots countries against their requirements, and white vertical lines accentuate the boundaries. All this helps the viewer grasp the information very quickly. We learn that banks would need a lot of money if the worst came to the worst (Spain was just bailed out).

b. Eat your greens plots the cost of environmental degradation as a % of GDP for various developing countries. The latter part is just an educated guess, and the G-20 are not part of the display.

c. Different strokes is a classic Economist staple chart, showing three different metrics for four countries over the same span of years. A simple inference one can draw is that Spain is the best of the lot except in employment, which is directly correlated with the construction boom Spain went through. Another graphic apparently also shows that banks lent for property more than for anything else.

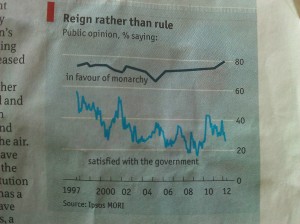

d. Reign rather than rule shows that people in Britain still rate the monarchy above their satisfaction with the elected government. The country name is missing, but since this graphic is part of an article about the monarchy in Britain, I have taken the liberty of providing that information. There is an interesting delineation of the century: 1997, 2000 and 2012 are written out in full with four digits, while the years between 2000 and 2012 use only two digits, yet we are never unsure where a point lies on the timeline. There is a small vertical line on the horizontal axis at either end of the timeline, which I assume just indicates continuity. Horizontal gridlines again make the slope of the line clear.

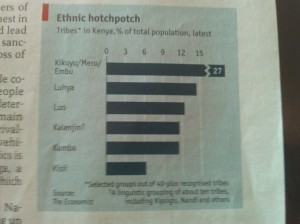

e. Ethnic Hotchpotch shows how fragmented Kenya is, with its various tribes shown as a % of the total population. The interesting part is the first horizontal row, depicting the Kikuyu/Meru/Embu tribes. Its bar is broken because the axis only extends to 15%. Wisely, the rest of the proportion is drawn beyond the 15% mark and labelled with the actual figure, in this case 27. The graphic also clarifies a lot of details about what data is covered.
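The axis-break trick in the Kenya chart is easy to reproduce. Below is a minimal text-only sketch, assuming a 15% axis cap as described above; the `broken_bar` helper and the two placeholder groups are hypothetical, and only the 27% figure comes from the chart.

```python
def broken_bar(value, axis_max=15.0):
    """Return (drawn_length, label, is_broken) for a bar on a capped axis.

    Values above axis_max are drawn only up to the cap and flagged as
    broken, carrying a text label with the real figure instead.
    """
    if value <= axis_max:
        return value, None, False
    return axis_max, f"{value:g}", True


# Only the first figure is from the chart; the other groups are placeholders.
shares = {
    "Kikuyu/Meru/Embu": 27,
    "Group B (hypothetical)": 12,
    "Group C (hypothetical)": 9.5,
}

for group, pct in shares.items():
    length, label, broken = broken_bar(pct)
    bar = "#" * round(length)                   # one '#' per percentage point
    marker = f" //{label}%" if broken else ""   # break mark plus true value
    print(f"{group:<24}{bar}{marker}")
```

The design choice is the same one the chart makes: keep the scale readable for the many small groups and let a label, rather than bar length, carry the one outlier.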

f. The Houston graphic plots population density (people per hectare) for various cities against CO2 emissions (tonnes per person). Circles of the same size are scattered across the chart, with white ones singling out particular cities. It brings out the fact that both Texan cities are in bad shape, combining very low population density with high emissions.

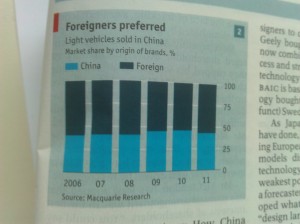

g. This stacked bar chart shows that, of total vehicle sales in China, buyers still prefer foreign brands. Here again the white background bars accentuate the data, and that slight extra overhang does a beautiful job of setting off the numerical label.

h. Anti-social behavior contains two graphics. A line chart plots two quantities, the number of trips and the distance travelled, and both are going down. A pie chart shows the reason for each trip and its % change from 1995-97. There are two small issues here. First, the title does not indicate the country, so the graphic does not stand by itself. Second, the pie chart implies a change between two years, but the reference period is given as the range 1995-97. Shopping trips could have gone down because people multitask, picking up groceries on their way home, which is possible when a convenience store is near a transport link. Why visiting friends has gone down is strange, but perhaps it could be explained by social media 🙂
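The baseline ambiguity matters because the answer changes with the base. A minimal sketch, assuming the 1995-97 base means the average of those three years (the chart does not say how it was built); the trip counts and the `pct_change_vs_base` helper are hypothetical, not the chart's data.

```python
from statistics import mean


def pct_change_vs_base(values_by_year, base_years, target_year):
    """% change of target_year relative to the mean of base_years.

    Averaging the base range is one common convention; it is an
    assumption here, not something the chart states.
    """
    base = mean(values_by_year[y] for y in base_years)
    return 100.0 * (values_by_year[target_year] - base) / base


# Hypothetical shopping trips per person per year (illustrative only).
shopping_trips = {1995: 220, 1996: 210, 1997: 200, 2010: 180}

vs_range = pct_change_vs_base(shopping_trips, [1995, 1996, 1997], 2010)
vs_1995 = pct_change_vs_base(shopping_trips, [1995], 2010)
print(f"vs 1995-97 average: {vs_range:.1f}%")  # -14.3%
print(f"vs 1995 alone:      {vs_1995:.1f}%")   # -18.2%
```

With these made-up numbers the two readings differ by almost four percentage points, which is why stating the base explicitly helps the reader.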

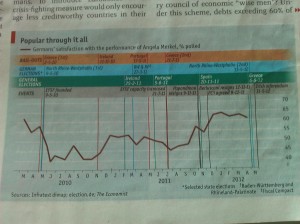

i. This graphic plots the German chancellor's popularity from March 2010 to May 2012, with events, bailouts and dates superimposed to provide deeper context. Angela Merkel's popularity has held up well and has even risen a few notches, which corresponds to her handling of Spain and Greece, although it did waver during the Irish and Portuguese crises. German elections are marked, but it would have helped to show the increase or decrease in the % of seats won.

Medium ones

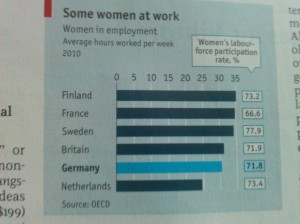

a. Women at work is a simple graphic, with numerical values added on the right overhang and Germany highlighted in a different color. The data is qualified as being for 2010. The graphic stands on its own in conveying important information about a bill to help women stay at home and take care of their families.

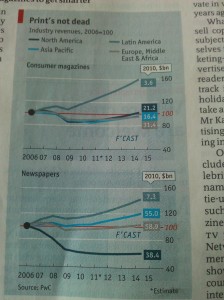

b. This plot shows magazine and newspaper revenue from 2006 to 2010, plus a forecast to 2015, and indicates that the 2011 figure is an estimate. 2006 revenue is indexed to 100, which brings out the scale of the increase in magazine revenue, and this base of 100 is marked with a colored horizontal line. An interesting addition is the numerical figure giving 2010 sales, whose colored background ties it to the corresponding area of the chart.

This has to be the most beautiful representation of the data here: it conveys that magazines and newspapers are both surviving, with the former doing far better than the latter. There is a slight background color change for the 2012-2015 period (just that touch again shows the dedication).
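Indexing to a base year, as this chart does with 2006 = 100, is a one-line transformation. The sketch below assumes a simple `{year: value}` series; the revenue figures and the `rebase` helper are hypothetical, not the chart's data.

```python
def rebase(series, base_year):
    """Scale a {year: value} series so that the base_year value becomes 100."""
    base = series[base_year]
    if base == 0:
        raise ValueError("cannot index against a zero base")
    return {year: 100.0 * value / base for year, value in series.items()}


# Hypothetical revenue in arbitrary currency units (illustrative only).
magazines = {2006: 50, 2008: 55, 2010: 60}
newspapers = {2006: 80, 2008: 72, 2010: 64}

for name, series in [("Magazines", magazines), ("Newspapers", newspapers)]:
    indexed = rebase(series, 2006)
    print(name, {year: round(v, 1) for year, v in indexed.items()})
```

Indexing puts two series of very different size on one axis so their growth can be compared directly. The trade-off is that the reader loses the absolute levels, which is perhaps why the chart also prints the 2010 sales figure as a number.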

c. Stormy seas shows that the container shipping industry might be making a comeback after a really bad year last year. Prices might be going up because the excess capacity has finally been used up; reading the article, it was clear that the industry has stopped adding a lot of new capacity. Last year freight prices were down while fuel prices were going up. One phrase that stands by itself and is a bit confusing is "Asian-northern Europe centistoke bunker fuel"; this is too much clarification data. Using colors to tie each of the two y-axes to its line helps in reaching the above conclusion.

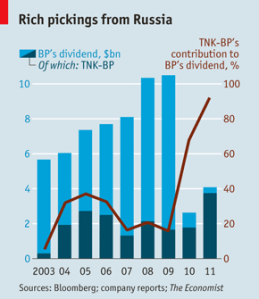

d. TNK-BP shows one important thing: BP is drawing heavy dividends from the subsidiary, and last year it extracted especially a lot (close to 90%). Showing the % on top of the stacked bar is a wonderful idea, as it conveys the sharp increase in relative terms. It looks like the subsidiary will have to find its own money next year.

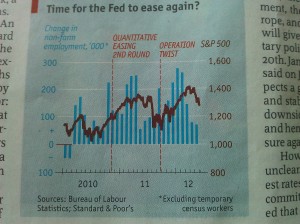

e. Next, a graphic that shows monthly employment as bars from January 2010 to June 2012. Employment seems to be falling from its peak in January 2012, and the S&P is also going down at this juncture. Two specific rounds of "push" are marked vertically.

Both graphics above use a single horizontal axis for two different metrics. This condensation helps comprehension.

Tough ones

a. Now comes the most interesting graphic, which compresses a lot of information and demands concentration from the reader. Everything, from the word "abatement" down to the heights and widths of the bars, has to be explained and understood as a whole. As far as I can see, it is saying that, for each activity, abating CO2 by a certain year has a certain cost and a certain potential. I would guess it is a form of variwide chart?
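If the chart is read as a variwide chart, its layout rule is simple: sort the activities by cost, make each bar as wide as its abatement potential and as tall as its cost, and place the bars side by side so that each left edge is the running total of the widths before it. The sketch below assumes that reading; the `Measure` type, the activities, costs and potentials are all invented for illustration.

```python
from dataclasses import dataclass
from itertools import accumulate


@dataclass
class Measure:
    name: str
    cost: float       # bar height, e.g. cost per tonne of CO2 abated (assumed)
    potential: float  # bar width, e.g. tonnes abatable by the target year (assumed)


def variwide_layout(measures):
    """Return (name, left_edge, width, height) rows, cheapest measure first."""
    ordered = sorted(measures, key=lambda m: m.cost)
    # Left edge of each bar = sum of the widths of all cheaper bars.
    lefts = list(accumulate((m.potential for m in ordered), initial=0.0))[:-1]
    return [(m.name, left, m.potential, m.cost) for m, left in zip(ordered, lefts)]


# Hypothetical measures (negative cost = the measure saves money).
measures = [
    Measure("Insulation", -40, 1.5),
    Measure("Wind power", 20, 2.0),
    Measure("Carbon capture", 45, 1.0),
    Measure("Efficient lighting", -60, 0.5),
]

for name, left, width, height in variwide_layout(measures):
    print(f"{name:<20} x={left:4.1f} width={width:3.1f} cost={height:+.0f}")
```

Because widths encode quantity and heights encode price, the total area below zero reads as potential savings, which is exactly why this kind of chart needs so much explanation before it clicks.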

b. Next, a much better analysis and its representation. It confirms economists' estimates of a slowdown and also indicates a lowering of the living standard in the US. It is also a good attempt to compare real wages and buying power across countries. The author of this study has data on wages and burger prices, and divides the McWage (the hourly wage paid to McDonald's crew) by the price of a Big Mac to get "Big Macs per hour", or BMPH, for comparing countries. Together, the two graphics tell us that the US, Canada, Western Europe and Japan have similar wages, while workers in the developing countries, specifically India, earned about 10% of the developed-world level. In the US, BMPH was 2.4 in 2007. The McWage is up 26% in four years, but the price of a Big Mac is up 38%, possibly due to rising food prices. The net 9% drop in US BMPH (1.26 / 1.38 ≈ 0.91) is one sign of a reduced overall standard of living. In Russia, BMPH increased 152% from 2000 to 2007 and another 42% from 2007 to 2011. China saw increases of 60% from 2000 to 2007 and another 22% from 2007 to 2011. India saw a large increase of 53% from 2000 to 2007, but its BMPH declined by 10% from 2007 to 2011.
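The BMPH arithmetic above can be checked in a few lines. This is a sketch: only the 26% and 38% growth rates and the 2007 figure of 2.4 come from the text, and the helper names are illustrative. Because BMPH is a ratio, the absolute wage and price levels cancel out, so unit values stand in for them.

```python
def bmph(hourly_wage, big_mac_price):
    """Big Macs per hour: how many Big Macs one hour of wages buys."""
    return hourly_wage / big_mac_price


def pct_change(old, new):
    return 100.0 * (new - old) / old


# Growth rates quoted for the US, 2007 to 2011.
wage_growth, price_growth = 0.26, 0.38

bmph_change = pct_change(
    bmph(1.0, 1.0),                                # 2007, normalized
    bmph(1.0 + wage_growth, 1.0 + price_growth),   # 2011 relative to 2007
)
print(f"US BMPH change 2007-2011: {bmph_change:.1f}%")  # -8.7%
print(f"Implied 2011 BMPH from 2.4: {2.4 * (1 + bmph_change / 100):.2f}")  # 2.19
```

The result, about -8.7%, is the "net 9% drop" quoted above; the implied 2011 level is derived here and not taken from the study.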

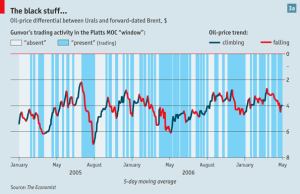

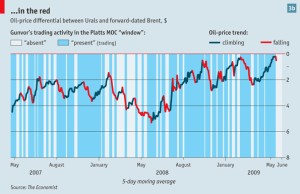

c. Now the most interesting set of graphics, which suggests that Gunvor's trading was either a signal for, or simply a driver of, Urals prices (Urals being a heavier crude with more sulphur) compared with Brent. The chart does not include Brent data, but Urals prices from January 2005 to May 2009 are plotted alongside the periods of Gunvor trading. Most of the time, prices can be seen to fall during these periods, but we would need to set this against Brent data to see any correlation. The conspiracy theory, of course, is that Gunvor bought the oil at a low price and sold it to Europe at a higher one. Now, isn't this great detective work? Apparently the study was done over two years.
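The check suggested above, setting Urals prices against Brent, could look something like this: compute the Urals-minus-Brent spread for each month and compare its average inside and outside the flagged trading periods. This is a sketch under assumptions: the prices and flags are made-up placeholders, and `spread_by_period` is a hypothetical helper, not the method used in the study.

```python
from statistics import mean


def spread_by_period(urals, brent, flagged):
    """Mean Urals-minus-Brent spread inside and outside flagged months.

    urals, brent: monthly prices covering the same months, in the same order
    flagged: True for months that fall inside a trading period of interest
    """
    if not (len(urals) == len(brent) == len(flagged)):
        raise ValueError("series must cover the same months")
    spreads = [u - b for u, b in zip(urals, brent)]
    inside = [s for s, f in zip(spreads, flagged) if f]
    outside = [s for s, f in zip(spreads, flagged) if not f]
    return mean(inside), mean(outside)


# Hypothetical monthly prices in $/barrel (illustrative only).
urals = [60.0, 58.5, 57.0, 61.0, 62.5, 59.0]
brent = [62.0, 61.5, 61.0, 63.0, 64.0, 63.5]
flagged = [False, True, True, False, False, True]

inside, outside = spread_by_period(urals, brent, flagged)
print(f"mean spread in flagged months:   {inside:.2f}")
print(f"mean spread in unflagged months: {outside:.2f}")
```

A wider discount during flagged months would be consistent with the theory but would not prove it; a real analysis would also have to account for the other things that move the Urals-Brent spread.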

One common thing you will notice is the red bar on every graphic. When an article has multiple graphics, they are also numbered in the top right corner. It is all consistent and concise, and all that data science work proves that it is the question that matters, not knowledge of the tools or infrastructure; those can be developed or leveraged. Right now many folks are struggling with why and when to use Big Data (how to get it, store it and analyze it).

Books on visualization:

- Show Me the Numbers, by Stephen Few
- The Visual Display of Quantitative Information, by Edward R. Tufte
- The Wall Street Journal Guide to Information Graphics, by Dona M. Wong
- Visualize This!, by Nathan Yau
- Information Visualization, by Colin Ware
- How Maps Work, by Alan M. MacEachren
- The Back of the Napkin, by Dan Roam

At times visualization clutters up the information and leaves the reader confused. One such case is from the New York Times: how does a person in the middle quintile compare with one in the top quintile? Sometimes the plain representation of the data is simply wrong: how can accuracy be 120%, as in one paper analyzing liver issues? So overdoing it, or doing it for its own sake, is not advisable. That is why I like The Economist's use of plain graphics; they get the point across without too much clutter.

Although there are many visualization websites, this one is neat.