We generally use datastores to ingest data and try to make some meaning out of it by means of reports and analytics. Over the years we have had to make decisions about adopting different stores for “different” workloads.

The simplest is analysis, where we offload to pre-aggregated values using either columnar or distributed engines to scale out with the volume of data. We have also seen the rise of stores that lay data out in a way that is friendly to a range of data. Then there are some that allow very fast lookups and are maturing towards doing aggregations on the fly. We have also seen the use of data-structure stores: the hash-table-inspired designs versus the ones that don sophisticated avatars (gossip, vector clocks, bloom filters, LSM trees).

That other store, the one which pushed compute to storage, is undergoing a massive transformation to adopt streaming and (hopefully) regular OLTP, apart from its usual data-reservoir image. Then we have the framework-based plug-and-play systems doing all kinds of sophisticated streaming and other wizardry.

Many of the stores require extensive knowledge of their internals: how data is laid out, techniques for using the right data types, how data should be queried, issues of availability, and taking decisions which are generally “understandable” to the business stakeholders. When things go wrong, the tools range from just logging an error to showing the actual “path of execution” of the query. At present there is a lot of ceremony around capacity management and around how data changes are logged and pushed to another location. This much detail is a great “permanent job guarantee” but does not add much long-term value for the business.

Update (2014-22nd Aug) – DocumentDB seems to take away most of the pain: http://azure.microsoft.com/en-us/documentation/services/documentdb/

- Take away my schema design issues as much as it can

What do I mean by it? Whether it is a traditional relational database or one of the new-generation NoSQL stores, one has to think through either the ingestion pattern or the query pattern to design the store's representation of entities. This is by nature a productivity killer and creates an impedance mismatch between how entities are stored and how they are represented in the application.

Update (2014-22nd Aug) – DocumentDB – I need to test it with a good amount of data and a variety of query patterns, but with auto-indexing and SSDs it looks like we are on our way here.

- Take away my index planning issues

This is another of those areas where a lot of heartburn takes place, because a lot of the innards of the store's implementation are exposed. If this were done completely automagically it would be a great time-saver: just look at the queries and either create the required indexes or drop the unused ones. A lot of performance regressions are introduced as small changes accumulate in the application and make their way down to the database level.

Update (2014-22nd Aug) – DocumentDB does it automatically and has indexes on everything. It only requires me to drop what I do not need. Thank you.
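To make the "just look at the queries and create or drop indexes" wish concrete, here is a minimal sketch. It is not how DocumentDB or any particular store does it; the query-log format, the field names and the `min_hits` threshold are all assumptions made up for illustration.

```python
from collections import Counter

def suggest_indexes(query_log, existing_indexes, min_hits=2):
    """Return (to_create, to_drop) based on which fields queries filter on."""
    # Count how often each field appears as a filter across the logged queries.
    hits = Counter(field for filters in query_log for field in set(filters))
    # Fields filtered on often enough are worth an index (threshold is arbitrary).
    wanted = {field for field, count in hits.items() if count >= min_hits}
    to_create = sorted(wanted - set(existing_indexes))
    # An index on a field that no logged query filters on is a drop candidate.
    to_drop = sorted(set(existing_indexes) - set(hits))
    return to_create, to_drop

# Hypothetical log: each entry lists the fields one query filtered on.
query_log = [
    ["customer_id"],
    ["customer_id", "order_date"],
    ["order_date"],
    ["status"],
]
existing = ["customer_id", "region"]

to_create, to_drop = suggest_indexes(query_log, existing)
print(to_create)  # ['order_date']
print(to_drop)    # ['region']
```

A real advisor would also weigh write cost and selectivity; the point is only that the inputs are the queries themselves, not the designer's guesses.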

- Make scale out/up easier

Again, this is exposed to the end application designer in terms of which entities should be sharded vertically or horizontally. It ties back to point 1, since the choice depends on the ingestion or query pattern. It makes or breaks the application in terms of performance and has an impact on how the application can evolve.

Update (2014-22nd Aug) – DocumentDB makes it a no-brainer again. Scaleout is done in capacity units (CU). I still need to understand how the sharding is done.
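Why the sharding decision ties back to the query pattern can be shown with a small sketch of plain hash sharding. This is a generic illustration, not DocumentDB's scheme (which I have yet to understand); the shard count, the `customer_id` shard key and the helper names are assumptions.

```python
import hashlib

NUM_SHARDS = 4  # assumed shard count for the sketch

def shard_for(key):
    """Map a shard-key value to a shard using a stable hash."""
    digest = hashlib.sha256(str(key).encode("utf-8")).hexdigest()
    return int(digest, 16) % NUM_SHARDS

def shards_to_query(filters, shard_key):
    """Return the shards a query must touch, given its equality filters."""
    if shard_key in filters:
        # The filter pins the shard key, so exactly one shard holds the data.
        return [shard_for(filters[shard_key])]
    # Otherwise every shard has to be asked (scatter-gather).
    return list(range(NUM_SHARDS))

# Hypothetical orders collection sharded by customer_id.
by_customer = shards_to_query({"customer_id": 42}, shard_key="customer_id")
by_status = shards_to_query({"status": "open"}, shard_key="customer_id")
print(len(by_customer))  # 1
print(len(by_status))    # 4
```

A query that filters on the shard key touches one shard; anything else fans out to all of them, which is why a shard key picked for ingestion can hurt the queries later.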

- Make “adoption” easier by using existing declarative mechanisms for interaction. Today one has to choose the store’s own way rather than good old DDL/DML, which is at least 90% the same across systems. This induces fatigue for ISVs and larger enterprises who look at the cost of “migration back and forth”. Declarative mechanisms have the feel of a lullaby that calms the mind, and so we indulge in scaleup first, followed by scaleout (painful for the application).

Make sure the majority of the clients are on par with each other. We may not need something for Rust immediately, but at least ensure PHP, Java, .NET and their derived languages have robust enough interfaces.

Make it easier to “extract” my data in case I need to move out. Yes, I know this is the least likely place for resources to be spent, but it is super-essential and builds trust for the long term.

Lay out the roadmap in simple terms, showing where you are heading, so that I do not spend time on activities which will become part of the offering.

Lay out in simple terms where you have seen people run into issues or make wrong choices, and share the workarounds. Transparency is the key. If the store is not a good place for the latest “x/y” kind of work, share that and we will move on.

Update (2014-22nd Aug) – DocumentDB provides a SQL interface!

- Do not make choosing the hardware a career-limiting move. We all know stores like memory, but persistence is key for trust. SSD/HDD, CPU/core, virtualization impact: there are way too many moving choices to make. Make 70-90% of scenarios simple to decide. I can understand that some workloads require a lot of memory, or only memory, but do not present a swarm of choices. Do not tie us down to specific brands of storage or networking which may not be around after a few years.

In the hosted world pricing has become crazier. Lay out in simple-to-understand terms how costing is done. In a way, licensing by cores/CPU was great because I did not have to think much: I pretty much over-provisioned, or did a performance test, and moved on.

Update (2014-22nd Aug) – DocumentDB again simplifies the discussion. It is SSD backed and pricing is very straightforward: by requests, not by reads, writes or indexed collections.

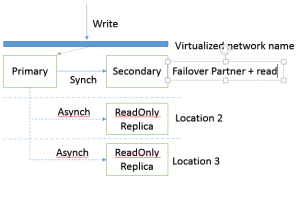

- Resolve HA/DR in a reasonable manner. Provide a simple guide to understanding the hosted vs. host-your-own worlds. Share clearly how clients should connect and fail over. We understand distributed systems are hard, so if the store supports the distributed world, help us navigate the impact and the choices in simple layman's terms or in terms of something we are already aware of.

If there’s an impact in terms of consistency, please let us know. Some of us care more about it than others. Eventual consistency is great, but having to say that reports are not “factual” because we are waiting for logs to get applied is not something I am gung-ho about yet.

Update (2014-22nd Aug) – DocumentDB – it looks like it is highly available within the local DC. I am assuming cross-DC DR is on the radar. DocumentDB shares the available consistency levels clearly.
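The "waiting for logs to get applied" worry can be sketched in a few lines. This is a toy model under stated assumptions, not DocumentDB's replication: the `Primary` and `Replica` classes, the log format and the `sales` key are all hypothetical.

```python
class Primary:
    """Accepts writes and records them in an ordered log."""
    def __init__(self):
        self.log = []  # ordered list of (key, amount) writes

    def write(self, key, amount):
        self.log.append((key, amount))

class Replica:
    """Serves reports from whatever part of the log it has applied so far."""
    def __init__(self):
        self.applied = 0   # number of log entries applied so far
        self.totals = {}

    def apply(self, log, max_entries=None):
        """Apply pending log entries, optionally only the next few."""
        end = len(log) if max_entries is None else min(len(log), self.applied + max_entries)
        for key, amount in log[self.applied:end]:
            self.totals[key] = self.totals.get(key, 0) + amount
        self.applied = end

    def lag(self, log):
        """Number of log entries not yet visible to reports."""
        return len(log) - self.applied

primary, replica = Primary(), Replica()
primary.write("sales", 100)
primary.write("sales", 50)
primary.write("sales", 25)

replica.apply(primary.log, max_entries=2)   # replica is one write behind
stale_report = replica.totals["sales"]      # 150, not yet "factual"
pending = replica.lag(primary.log)          # 1

replica.apply(primary.log)                  # catch up
fresh_report = replica.totals["sales"]      # 175
```

If the store at least exposes something like `pending`, a report can say how stale it is instead of silently being wrong.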

- Share clearly how monitoring is done for the infrastructure in both the hosted and host-your-own cases. Share a template of “monitor these always” and “take these z actions”, a sort of literal rulebook which again makes adoption easier.

Update (2014-22nd Aug) – DocumentDB provides out-of-the-box monitoring. I need to see the template or the two things to monitor; I am guessing latency per operation is one and size is another. I need to think through the scaleout unit. I am sure that as more people push, we will be in a better place.
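The "monitor these always, take these actions" rulebook could be as small as a table of thresholds and actions. The sketch below is only a guess at the shape: the metric names, thresholds and actions are hypothetical and not taken from any DocumentDB documentation.

```python
# Hypothetical rulebook: metric name -> (threshold, action to take when exceeded).
RULEBOOK = {
    "p95_latency_ms": (200, "check slow queries and consider adding capacity"),
    "storage_used_pct": (80, "archive old data or add a scaleout unit"),
}

def evaluate(metrics, rulebook=RULEBOOK):
    """Return the actions whose thresholds are exceeded by the given metrics."""
    actions = []
    for name, (threshold, action) in rulebook.items():
        value = metrics.get(name)
        # Metrics missing from the sample are skipped rather than treated as zero.
        if value is not None and value > threshold:
            actions.append(f"{name}={value} exceeds {threshold}: {action}")
    return actions

# Made-up sample reading: latency is over its threshold, storage is not.
sample = {"p95_latency_ms": 350, "storage_used_pct": 40}
for line in evaluate(sample):
    print(line)
```

The value is less in the code than in the vendor filling in the table, so that nobody has to discover the thresholds the hard way.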

- Share how data at rest and data in transport can be secured and audited in a simple fashion. For the last piece, even if only actions are tracked, we will have a simple life.

Update (2014-22nd Aug) – DocumentDB – looks like admin/user permissions are separate. Data storage is still end developer responsibility.

- Share a simple guide for operations and day-to-day maintenance. This will be a life saver: x things to look out for, how to do backups, which checks to run. How to do an HA/DR check or a performance drilldown is normally part of the datahead’s responsibility. Do we look out for unbalanced usage of the environment? Is there some resource which is getting squeezed? What should we do in those cases?

Update (2014-22nd Aug) – DocumentDB – it looks like getting older data back, because a user deleted something inadvertently, is something users can push for.

Points 1-4 make adoption easier, and the later ones help with continued use.